The First Time In A Long Time That Google Felt Stupid

In pushing the Google Assistant to its limits, the tech titan has revealed that it’s a mere mortal.

When is the last time Google felt anything short of omniscient? This ethereal entity has become the perfect container for email, docs, photos, and maps. Google has gotten so good at search, it knows what you’re going to type before you even finish typing it. Can you even remember the last time you felt Google really failed you?

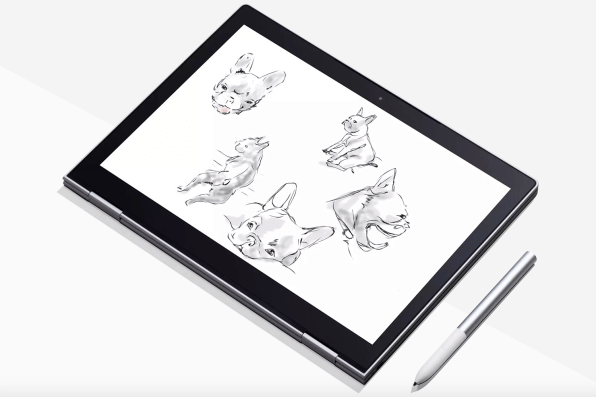

It’s not something I’d even considered until, for the first time in years, Google felt dumb. It happened when I was trying out the Pixelbook, the company’s new $1,000 touchscreen laptop. When coupled with the $100 Pixelbook Pen stylus, the combination allows you to mark up photos or draw right on your screen. But it also promises something wondrous: Circle anything on your screen at any time–photos, text, buttons on the screen, there’s no limit!–and Google’s AI Assistant can tell you more about it.

“Just press and hold the pen’s button, then circle images or text on your Pixelbook,” the ad promises. “It’s a fast, smart way to get answers and help with tasks.”

Well, that’s the theory–that I can point at stuff on my screen with a Neanderthal grunt, and Google, shining obelisk, will divine my intent. But within about two minutes of testing the feature, the truth became clear. While the company promises some downright incredible functions, like circling the photo of a dog to learn its breed, or spotting a friend’s vacation photo on social media then being linked to the location where it was taken, Google often has no idea what I’m circling, let alone why I’m circling it. I’ve encountered a rare moment when Google’s front-end design has surpassed its back-end intelligence. And Google, as it turns out, can still be pretty dumb.

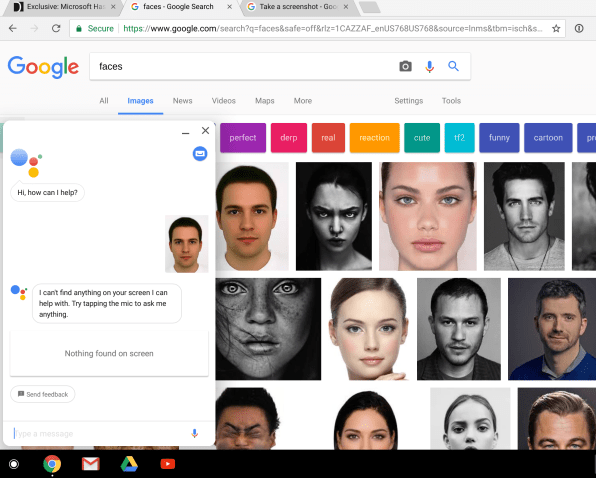

First, I highlighted a man’s face to see what I might get. To make it easy and as fair as possible, I actually got the face from a Google search! I wondered, will the Assistant see that it’s a man? Will it search for his identity? Will it spot the brand of foundation on his face? Will I just get a dozen iterations of his photo that have proliferated across the web?

As his visage appeared clearly in the Assistant chat window, Google responded, “I can’t find anything on your screen I can help with. Try tapping the mic to ask me anything.” Keep in mind, this was a face. A human face. The most painted and photographed entity of all time. It’s more than a bit ironic that the equation that melted Google was the human one.

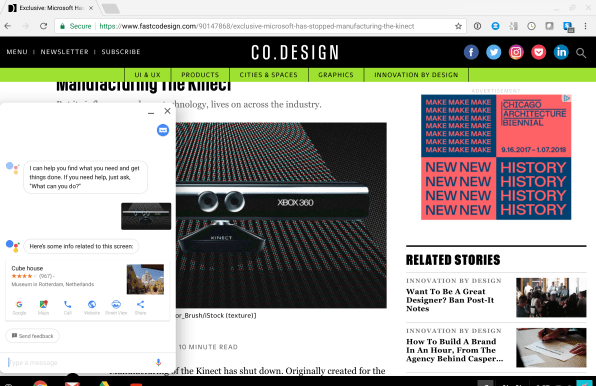

Maybe products will be easier, I thought. So on a Co.Design story about the Microsoft Kinect being discontinued, I circled the poster image. It’s a Kinect with a stylistic pattern in the back. I figured, maybe someone would see it and wonder what it is. Google Assistant guessed the Kinect was the famous Cube House. Apparently the extra styling confused the computer into thinking a piece of consumer technology was Piet Blom’s architectural landmark in Rotterdam. Later, I was able to highlight an isolated Kinect image and get a link to the product itself, but of course, I found this cleaner image only because I knew what I was searching for–a “Microsoft Kinect.” The Assistant is meant to be there for the moments that you have no words for which to search.

Highlighting words is probably the most obvious use for the Pen as Assistant. The dictionary functions work fine, but when Google tries anything more clever, it falls short. Circling “influential” brings up the definition of influential. Great! It also lists some random PR personality and chef, who I assume must be influential? When I circled the slang “lampin'”–recently made extra infamous via Curb Your Enthusiasm–Google had no immediate definition at all. But it was happy to point me to a search, allowing me to view the definition on Urban Dictionary.

In other words, searching text works for most definitions, but Google either feels compelled to guess something more–like some random “influential” person–or gives up and effectively tells me to “Google it.” It’s a fate that’s actually worse than the company just burning me with a perfectly timed “let me Google that for you.”

I think about how much more elegantly Amazon’s Kindle app handles this task. You highlight a word, and assuming you’ve downloaded the free dictionary, you get the definition right below. There’s no side Assistant box needed. There are no weird guesses. Because when you tap words on your Kindle, it’s likely with the intent to learn what they mean. That user intent is inherently constrained by the context of reading a novel.

Google is biting off a much larger problem with the Assistant for your entire screen. You could be tapping on any type of media in any app–let alone any word for any context. And while I’ve confirmed with Google that, yes, it does know the app you’re inside and take that along with other metadata into account, it still feels like a case where the user actually has a lot more context for their query than the system does.

In a recipe app, I might be highlighting a recipe just because I want to save it to my recipe collection. While in a spreadsheet, I might be circling a box because I want the Assistant to calculate a total for me. Forecasting these user intents requires near-psychic powers. It’s as if the Assistant is a clueless intern, forever running in late to meetings with no idea about the topic but chiming in with senseless advice anyway.

Google admits that visual recognition is among the hardest problems in computing–and in that sense, critiques like mine are armchair quarterbacking. Maybe Google will collect so much data from the Pen that it will inevitably get better at guessing your intent. Pinterest has been using a similar tool to allow searching within its photos for a few years now. With mountains of new information to train its machines, Google may one day just know what we want with our point-and-grunt searches as fluently as it predicts our queries within the four narrow walls of the ubiquitous white Search bar. That’s the hope of Google’s equally disappointing visual search tool, Google Lens.

But until then we should spend neither the time nor the money to be Google’s Pen-wielding lab rats–unlike the way we treat most Google products, which are either free or useful enough that we can forgive the company’s perpetual state of beta.
